Survey by MCI finds that two-thirds of Singapore users encountered harmful online content

SURVEY BY MCI FINDS THAT TWO-THIRDS OF SINGAPORE USERS ENCOUNTERED HARMFUL ONLINE CONTENT; NEARLY HALF TOOK NO ACTION AGAINST SUCH CONTENT

- Two-thirds of respondents encountered harmful online content; half of the parents surveyed reported that their children encountered harmful online content

- Among those who encountered harmful online content, only half took action to block the content or report to the platform. However, a majority faced issues with the reporting process

- Gaps remained in usage of privacy tools and child safety tools

- MCI will continue to work with government agencies, industry partners and the community to encourage the public to utilise available safety tools and report harmful online content to create a safer cyberspace for all

A Ministry of Communications and Information (MCI) survey found that, among Singapore users who had encountered harmful online content, around half had ignored it instead of taking action, such as reporting it to the online platform or blocking the offending content or account.

The survey was conducted online in May 2023 with 2,106 Singapore users aged 15 years old and above. It sought to understand Singapore users’ experiences with harmful online content, and their usage of online tools to address such content. Where the sample was not representative of the resident population by gender, age, or race, it was weighted accordingly to ensure representativeness. Among the 2,106 respondents, 515 were parents with children aged below 18 years old.
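
The weighting step described above can be illustrated with a minimal post-stratification sketch. The release does not give the survey's actual weighting procedure, cell definitions or population benchmarks, so the function names `poststratify` and `weighted_share`, the two age groups, and every figure below are hypothetical.

```python
from collections import Counter


def poststratify(sample_groups, population_shares):
    """Return one weight per respondent so weighted group shares match the population.

    sample_groups: list of group labels, one per respondent (hypothetical data).
    population_shares: dict mapping group label -> assumed population proportion.
    """
    n = len(sample_groups)
    counts = Counter(sample_groups)
    # Weight = population share / sample share of the respondent's group.
    return [population_shares[g] / (counts[g] / n) for g in sample_groups]


def weighted_share(flags, weights):
    """Weighted proportion of respondents whose flag is True."""
    return sum(w for f, w in zip(flags, weights) if f) / sum(weights)


# Hypothetical sample: 6 respondents aged 15-34 and 4 aged 35+,
# set against an assumed population split of 40% / 60%.
groups = ["15-34"] * 6 + ["35+"] * 4
encountered = [True, True, True, True, False, False, True, False, False, False]

weights = poststratify(groups, {"15-34": 0.4, "35+": 0.6})
print(round(weighted_share(encountered, weights), 3))
```

In this toy sample the over-represented younger group is weighted down and the older group weighted up, so the weighted share differs from the raw sample share. A survey weighted by gender, age and race would apply the same idea across cells or margins defined by all three variables.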

Prevalence and Types of Harmful Online Content

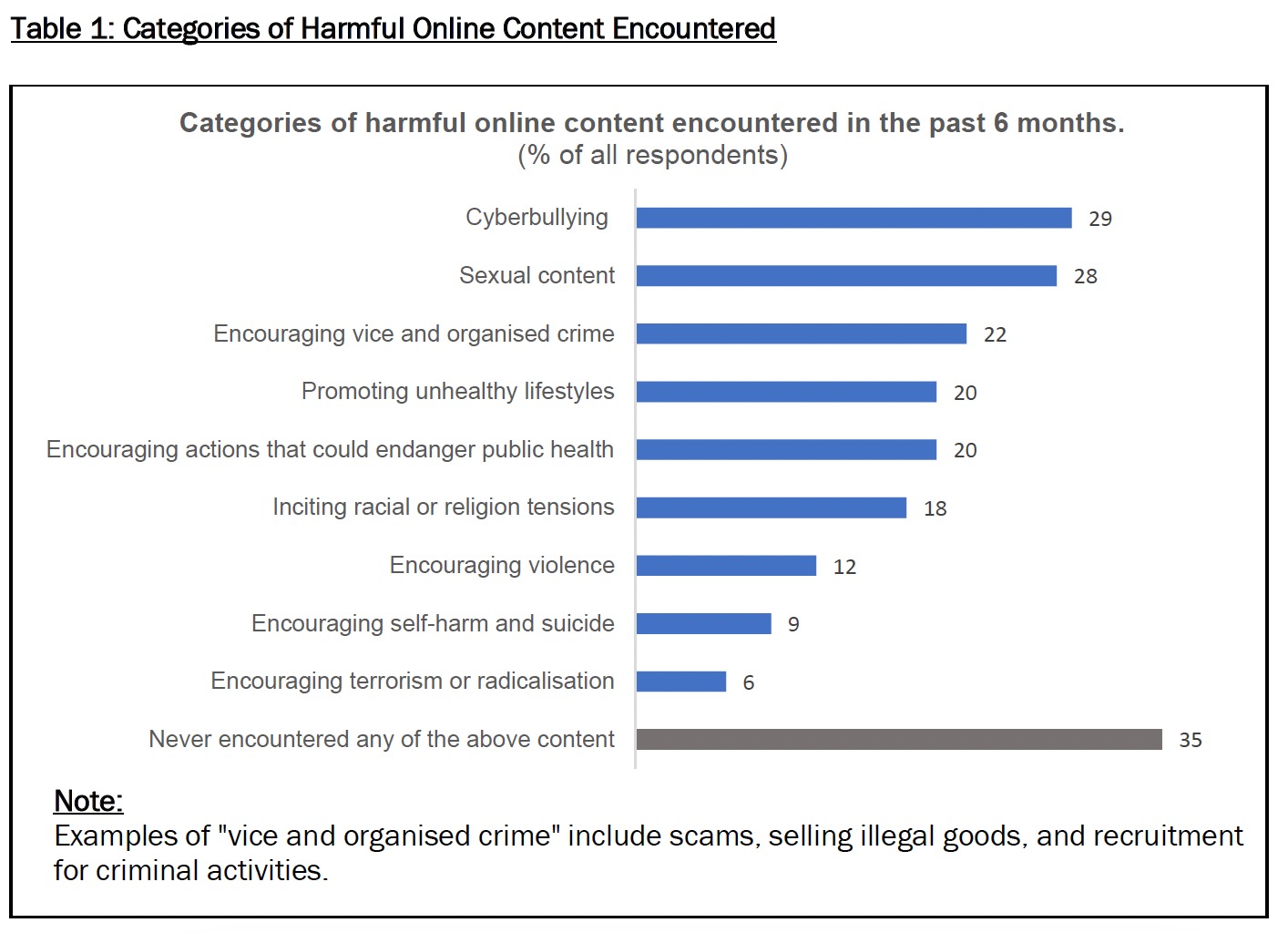

About two-thirds (65%) of Singapore users had encountered harmful online content, with the most common types being cyberbullying (29%) and sexual content (28%). Other types included content promoting illegal activities (22%), racial or religious disharmony (18%), violence (12%) and self-harm (9%) (see Table 1 below for details). About half (49%) of the parents surveyed also reported that their children had encountered harmful online content, particularly sexual content (20%) and cyberbullying (19%). The findings indicated that encounters with harmful online content, especially sexual content and cyberbullying, were relatively common among both adults and children in Singapore.

Among online services, encounters with harmful online content were most prevalent on social media services[1] (57%). In contrast, encounters via messaging apps (14%), search engines (8%) and emails (6%) were significantly lower.

For social media services that have significant reach or impact in Singapore[2], the proportion of active users who had encountered harmful online content on these platforms ranged from 28% to 57%.

More than Three-Quarters Experienced Problems when Reporting Harmful Online Content to Social Media Services

Despite the high prevalence of such content, nearly half (49%) of those who had encountered harmful online content said that they did nothing about it. The top reasons respondents cited for not reporting such content were that it did not occur to them to do so, or that they were unconcerned about the content. For those who took action, one-third (33%) blocked the offending content or account, and about one-quarter (26%) reported the content to the platform.

Among users who reported harmful online content to the platforms, more than three-quarters (75% to 82%) indicated that they faced issues with the reporting process. The main issues highlighted by users include: 1) the platform did not take down the harmful online content or disable the account responsible for it (27% to 40%), 2) the platform took too long to act (18% to 29%), and 3) the platform did not provide updates on the outcomes of their reports (18% to 24%).

Gaps Remain for Usage of Privacy Tools Despite Awareness of Such Tools

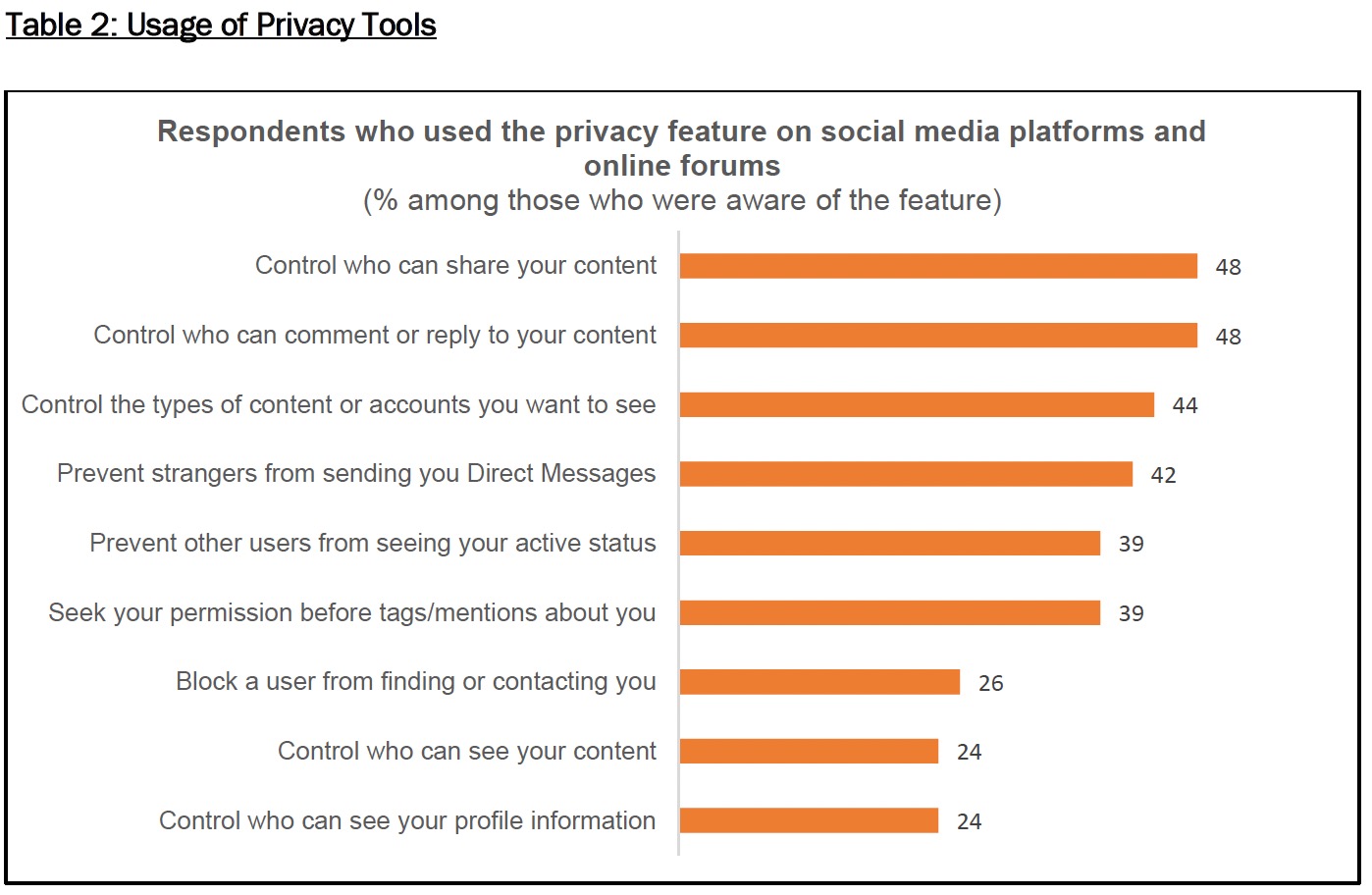

According to the survey, the majority of respondents (88%) knew of at least one privacy tool that they could use on social media services. Awareness was highest for tools that allowed users to control access to their profile information (56%) or their content (54%), and to block other users from finding or contacting them (52%).

Despite this awareness, there remained a gap in respondents’ usage of privacy tools. Among respondents who did not use some of the privacy tools that they were aware of, the top reasons cited were that they had not encountered issues which required them to use privacy tools (45%), or that they had never thought of doing so (28%) (see Table 2 on the prevalence of the use of various privacy tools).

Moderate Usage of Child Safety Tools by Parents

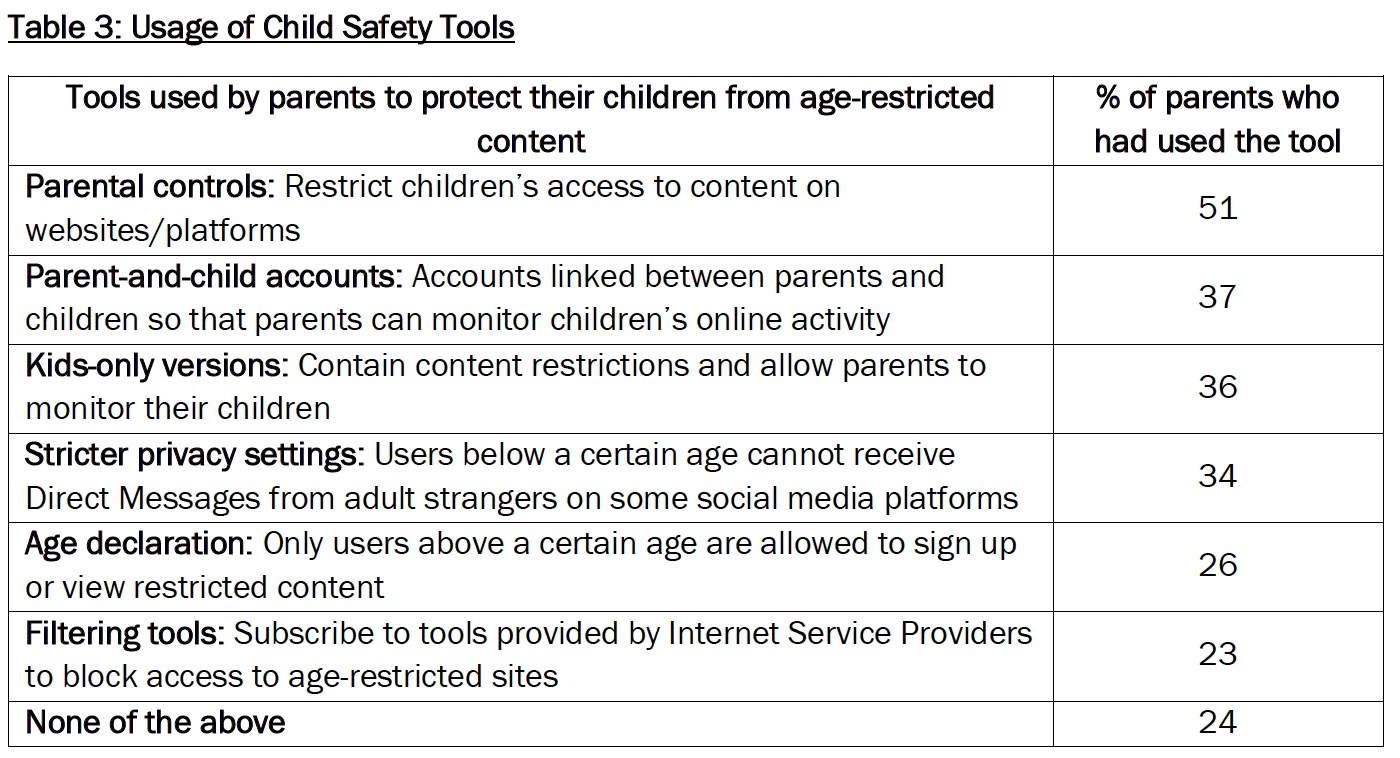

Half (51%) of parents surveyed had used parental controls to restrict the types of content that could be accessed by their children, but usage was lower for other child safety tools.

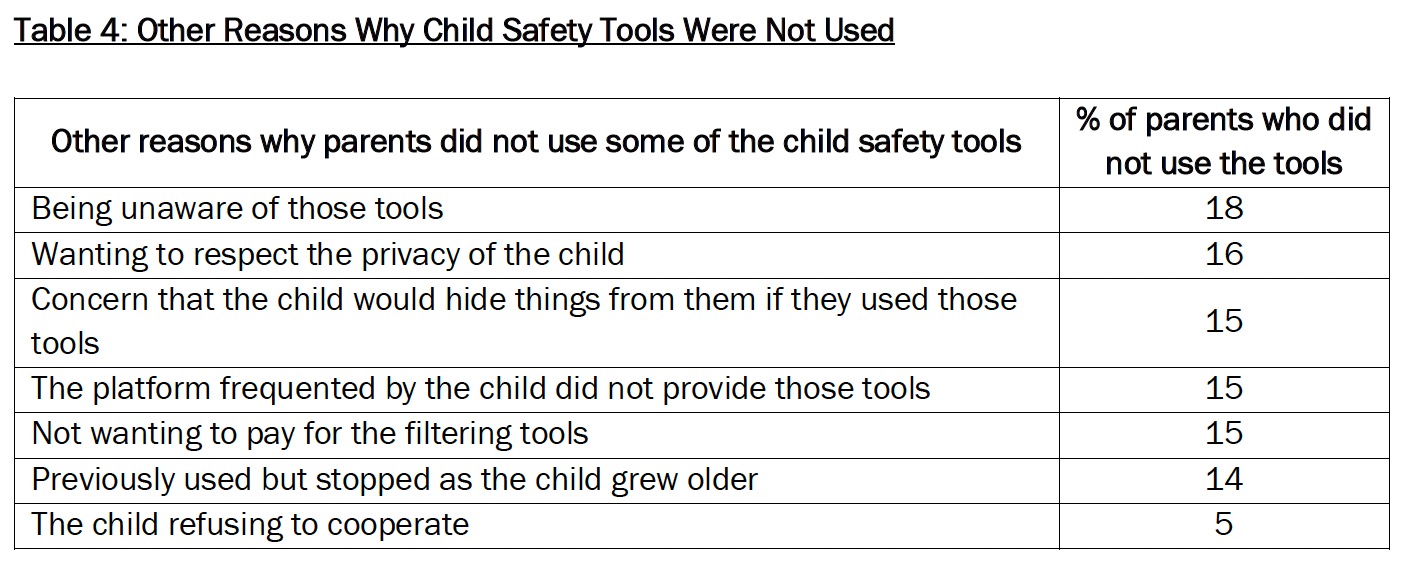

Among parents who did not use some of these tools, 3 in 10 (30%) indicated that their children had limited online access, while 2 in 10 (22%) trusted that their children could handle themselves online. The rest had other concerns about the use of these tools (see Table 4 below on why child safety tools were not used).

Conclusion

Harmful online content is a prevailing concern, especially given the pervasive use of online platforms among Singapore users. The survey findings indicated that although some 65% of Singapore users had encountered harmful online content, nearly 50% of them did not take action against it, either because it did not occur to them to report it or because they were not concerned about the content. Among the other half, who decided to block the content or report it to social media services, the majority of those who reported had experienced issues with the reporting process.

Countering harmful online content requires collective action from the people, private and public (3P) sectors. On the Government’s end, MCI worked with technology companies such as Google, Meta, ByteDance and X (formerly Twitter) to launch an Online Safety Digital Toolkit[3] in March 2023. This toolkit recommends parental controls, privacy and reporting tools, and self-help resources that individuals and parents can use to manage their own or their children’s safety online. In addition, MCI, the Ministry of Education (MOE) and the Ministry of Social and Family Development (MSF) are working on a Parents’ Toolbox, expected to be launched in phases from early 2024, to equip parents to build up their children’s well-being and resilience, support their children’s digital journey and keep them safe online.

Designated social media services also have a role to play by complying with the Code of Practice for Online Safety[4], which was introduced by the Infocomm Media Development Authority (IMDA) in July 2023. The Code requires these services to provide reporting tools that are accessible and easy to use, and to act on user reports in a timely manner. Under the Code, each designated social media service is also required to submit annual online safety reports, to help users make an informed choice about which platform best provides a safe user experience.

Beyond efforts by government agencies, civil society and end users also have a role to play. For instance, the Media Literacy Council, comprising representatives from the 3P sectors, has produced resources to help parents, youths and members of the public keep themselves and their loved ones safe online and manage potential online risks. These resources are publicly accessible on www.betterinternet.sg. End users can also play their part to make the online space safer for themselves and the community by reporting harmful online content to social media services. Ultimately, a whole-of-society approach is needed to provide a safe online environment for all Singapore users.

1. Social media services include social media platforms and online forums.

2. These platforms are Facebook, HardwareZone, Instagram, TikTok, X (formerly known as Twitter) and YouTube. They have been designated by the Infocomm Media Development Authority and are required to comply with the Code of Practice for Online Safety which came into effect on 18 July 2023.

3. MLC Tools and resources for managing your own safety online (betterinternet.sg)

4. Details of the Code of Practice for Online Safety can be found on IMDA’s website.